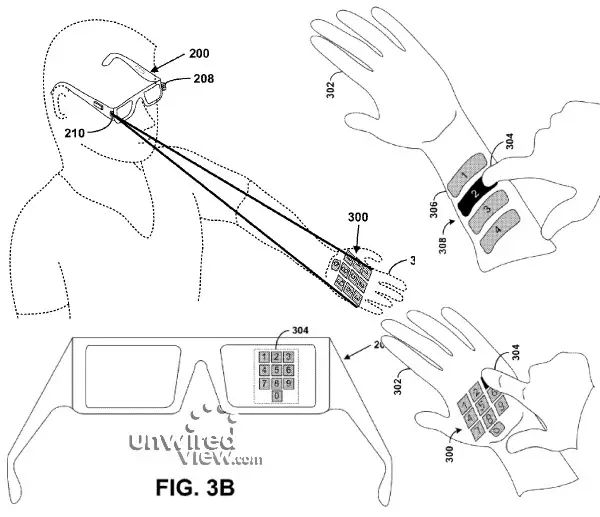

Google Project Glass is a pretty awesome, albeit uber-nerdy, concept that may or may not revolutionize mobile computing. But how, exactly, does one interact with the Android-based headgear? The most logical methods include voice control, gestures, or perhaps pairing with a smartphone, but a new patent application shows an intriguing method that Google could implement.

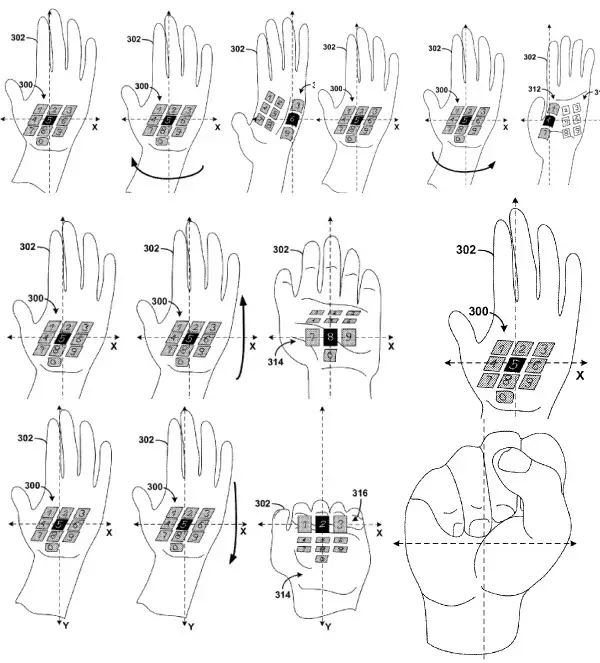

Yes, a laser-projected virtual keyboard. As if wearing Google Glass couldn’t get any dorkier, the device might eventually beam forth an intangible keypad that can be interacted with on multiple levels. Aside from basic typing, Google proposes that the keyboard could perform different functions based on the way it is projected on a human hand, providing easy gestures for quick (quasi) hands-free input.

The specific technology needed to accomplish such a task isn’t new, but as Google presents it, the current generation of laser-projected keyboards would need some major refinements. For this reason we doubt we will see the feature in the first iteration of Google Glass, but it very well could make it into future releases. Google, after all, is no stranger to releasing half-baked concepts to the public. I can see it now: Google Glass with Laser Keypad (beta).

[via UnwiredView]

Considering there is already a laser keyboard product on the market (http://www.celluon.com/products.php), it’s only a matter of time before it can be shrunk down far enough to fit within Google Glass.

For SURE not gonna see this on the 1st set of Google Glasses, but what an amazing and great idea to move forward with. Think about it…even if the user has to hold their hand 2 ft away from their face to use the virtual laser keypad, that would be freakin AWESOME!!! You’d just raise your left hand up in front of your face and a proximity sensor would automatically turn the laser keypad on and then boom…you’d be able to dial on your open left hand with your right pointer finger. Or better, I could be sitting here in my cubicle with my Google Specs on and, just by looking at my wall and saying “Keypad”, a laser image appears and I’m able to call my buddy by bouncing a ball off each number!!! Oh man I love Google….if Google was a woman I swear I’d settle down.

Why does it have to actually project it onto your hand? Why can’t it just use the glasses display to overlay the image in your vision so that only you can see it?

Completely agree. Isn’t this the point of Glass, after all?

Exactly what I was thinking.

I think part of the problem with that might be the fact that the camera/laser/sensor needs some way to determine what and where you’re pressing. Projection on a surface is the obvious solution. If it’s only in your vision that means it’s being projected or displayed on the physical glass panel.

I’m no engineer, but I can’t imagine a way for a sensor or camera that isn’t pointed AT YOU to determine what you’re attempting to press. I mean, it would be like having a Wii, Kinect, or PS Move without that little sensor/camera that faces you. All you would need would be the remote. Even in movies the input module is projected outward, even if it is sometimes projected on thin air.

It should project with invisible infrared, and then use that to superimpose it on the display.

I’m no engineer either, but couldn’t they use finger tracking to detect where you pressed? The Kinect 2.0 already has this, just not applied to this scenario. If anything, they could combine the two: use infrared (or some sort of invisible virtual keypad) to track presses, while the actual keypad is displayed using augmented reality.

I think the technology is there to do it either way, it’s just shrinking it into glasses that’s difficult.

Using the glasses display and camera, you could virtually project it onto your arm or hand and it could detect fingers being in the way for X amount of seconds as a “click”. In theory this could be extremely easy, because when the display is first shown (when you put your arm or hand up) it basically takes a picture. Then it can use that image to compare to the next to see if anything in the area of the “button” has changed. Then it can calculate a percentage and if the number is high enough it’s interpreted as a click. Obviously it’s a little more complex than this (your arm won’t be completely still) but the general idea remains the same. The more ideal solution would be able to track arms/hands/fingers though, which is required anyway for gestures or to tell it to display the virtual keyboard.
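To make the comparison idea above a bit more concrete, here is a rough sketch in Python. Everything in it is an assumption for illustration only: frames are plain grayscale grids, a "button" is a rectangle in that grid, and the names `changed_fraction` and `detect_click` and the threshold values are made up. It ignores the arm-movement problem entirely, and nothing here comes from the patent or from any Glass API.

```python
from typing import List, Sequence, Tuple

Frame = List[List[int]]             # grayscale image: rows of 0-255 values
Region = Tuple[int, int, int, int]  # (top, left, height, width) of one button


def changed_fraction(reference: Frame, current: Frame, region: Region,
                     pixel_delta: int = 30) -> float:
    """Fraction of pixels in the button region that differ from the reference."""
    top, left, height, width = region
    changed = 0
    for r in range(top, top + height):
        for c in range(left, left + width):
            # A pixel counts as changed if its brightness moved noticeably.
            if abs(current[r][c] - reference[r][c]) > pixel_delta:
                changed += 1
    return changed / (height * width)


def detect_click(reference: Frame, frames: Sequence[Frame], region: Region,
                 min_fraction: float = 0.5, dwell_frames: int = 3) -> bool:
    """True if the region stays covered for dwell_frames consecutive frames."""
    streak = 0
    for frame in frames:
        if changed_fraction(reference, frame, region) >= min_fraction:
            streak += 1
            if streak >= dwell_frames:
                return True
        else:
            streak = 0  # the finger moved away, so start counting again
    return False
```

The reference frame stands in for the picture taken when the keypad is first shown, `min_fraction` is the "percentage" cutoff, and `dwell_frames` plays the role of "X amount of seconds". A real version would need to re-align the reference as the hand moves, which is where the hand and finger tracking comes in.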

If the keyboard is “projected”, it’s laser projected, so it will be able to tell what you pressed from you breaking the beam. It’s not just in your lens, it’s physically projected. I thought that was obvious from the article.

Yeah but what is it projected onto?

I really wish this sort of thing weren’t patentable, but I would expect Google will be good stewards.

I wonder what patents resulted from the “SixthSense” work 4 years ago, and who now controls them. It could cause a problem if the wrong people have them.

http://www.ted.com/talks/pattie_maes_demos_the_sixth_sense.html

I thought the same thing. But I recall it being 100% open source with no patents applied for. At least that’s what I got from the TED video.

Yeah, but if Google doesn’t patent them, someone else will put forth the idea of using existing technology and ideas inside a new product, and then Google would be locked out.

bring it on! future gadgets are getting real exciting :-)