At CES 2013, I showed you the crazy eye-controlled computer from Tobii that could become a Google Glass partner. Many of you had your doubts, but as we reported yesterday, a recently filed Google patent illustrates some of the exact functionality we predicted Tobii could bring to Glass.

Look at your hand. Dialpad beams onto it. Press your hand. Number dialed. What the heck just happened?

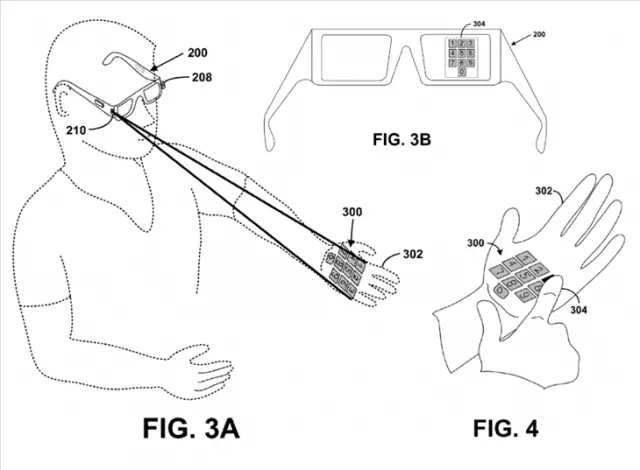

The functionality differs a little from Tobii's. Rather than tracking your eyes and correlating eye movement with a pressed button, Google Glass already knows where you're looking, because the glasses point in that direction. But notice item 304, where you're seeing the display through the glasses? Looks familiar.

Combine the technology that's already working brilliantly in the Tobii display with the concept shown above, and Google Glass could have something really interesting in store for us all. The Glass possibilities are undoubtedly marvelous, but how do you interact with all the ideas app developers come up with? Do you need to give voice commands? Will your phone act as a remote control? What's the deal? This type of interaction could solve that problem.

But this concept isn't new, as Devin Coldewey of NBC News Digital pointed out.

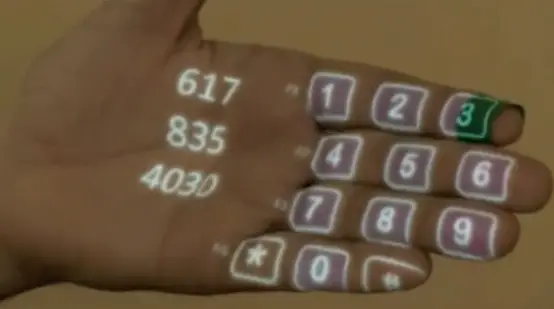

Above, you can see a 2009 TED video discussing SixthSense technology. Last year, Microsoft showed off an eerily similar Kinect-style concept that turns everything into a touch screen. First, let's take a look at a screenshot from the 2009 SixthSense video:

Now how about Microsoft’s rendition from last year?

Uh-oh, I can see where this is headed: patent court U-S-A. That said, Google's variation mounts the laser on a pair of glasses, works together with a glasses-mounted camera, and possibly incorporates eye-tracking technology (among other tech) to process user interactions efficiently. Crazy stuff.

Although the first Google Glass developer event is right around the corner, consumer-ready products built on the technology in this patent aren't likely within the next couple of years. And therein lies the patent race debacle: if these glasses and this technology become popular, which parts of which patents are valid, came first, or overlap? By the time these products generate corporate profits (if they do), the string of related patents will be so confusing that… well, things will seem like they haven't changed.

I think it's about that time. Time to start dreaming up futuristic movie ideas and, instead of writing a screenplay, filing patents based on that imaginary movie in hopes that someone else will one day try to patent it. Then again, the awesome, crazy, and exciting thing about Google is that, whereas other companies leave these ideas on the shelf as futuristic "concepts", Google is actually making them a reality.

Pranav stated that his tech is and will be open source.

From what I've read, Google co-sponsored much of SixthSense's early development via MIT's Media Lab, so I don't think things are as dire as one might think.

So, this is the future… hmm.

Holoband, huh?

This has been shown in movies for years. You can't patent something that was freely created. The underlying technology and how it works is something different. If it is truly open source, then all aspects of it should be protected under open-source licensing so that no company, including Google, can patent it.

Apple begs to differ, Mr. Fancypants McLawyerson

What's been shown in movies is a variety of devices implanted in or tattooed on one's limbs and other body parts, visible to anyone around. This idea is new: it keeps the keyboard hidden, even from a person standing next to you, because it's not projected or displayed anywhere but in your glasses. Truly remarkable idea, fantastic for privacy.

Or you'll just look like an idiot typing on a wall?

Perhaps you can't patent the idea, but you can patent the technology behind it (if it doesn't already exist).

I don't understand why, when you're looking through a screen, you would need to project anything onto a physical object. Why not just use the screen to make it appear that an image or set of images is projected onto a certain spot on your body? That way we don't have to walk around looking at projected buttons on our hands like a bunch of dorks, and it would work the same way.

Because we wouldn’t look like dorks, we would look like uber-geeks with awesome powers.

It wouldn’t be social, for one, unless the other people were wearing google glass as well (loved the subway floor pong game, btw).

But yeah, for single-person use, you don't actually need to project an image onto real-world objects. Simply simulating the projection with the Google Glass HUD would give the same effect. Then the surface itself could be virtual: "g.glass: simulate flat virtual surface at arm's length." You'd put your hands out and interact in space. 3D objects could also be simulated. You could work on a virtual Rubik's cube or manipulate a 3D object for later 3D printing.

I imagine this is part of the vision, but it just wasn't spelled out in this article. The TED video was from several years ago.

Hey, that's my area code… 617. Boston is in the house! Lol

This is pretty sick