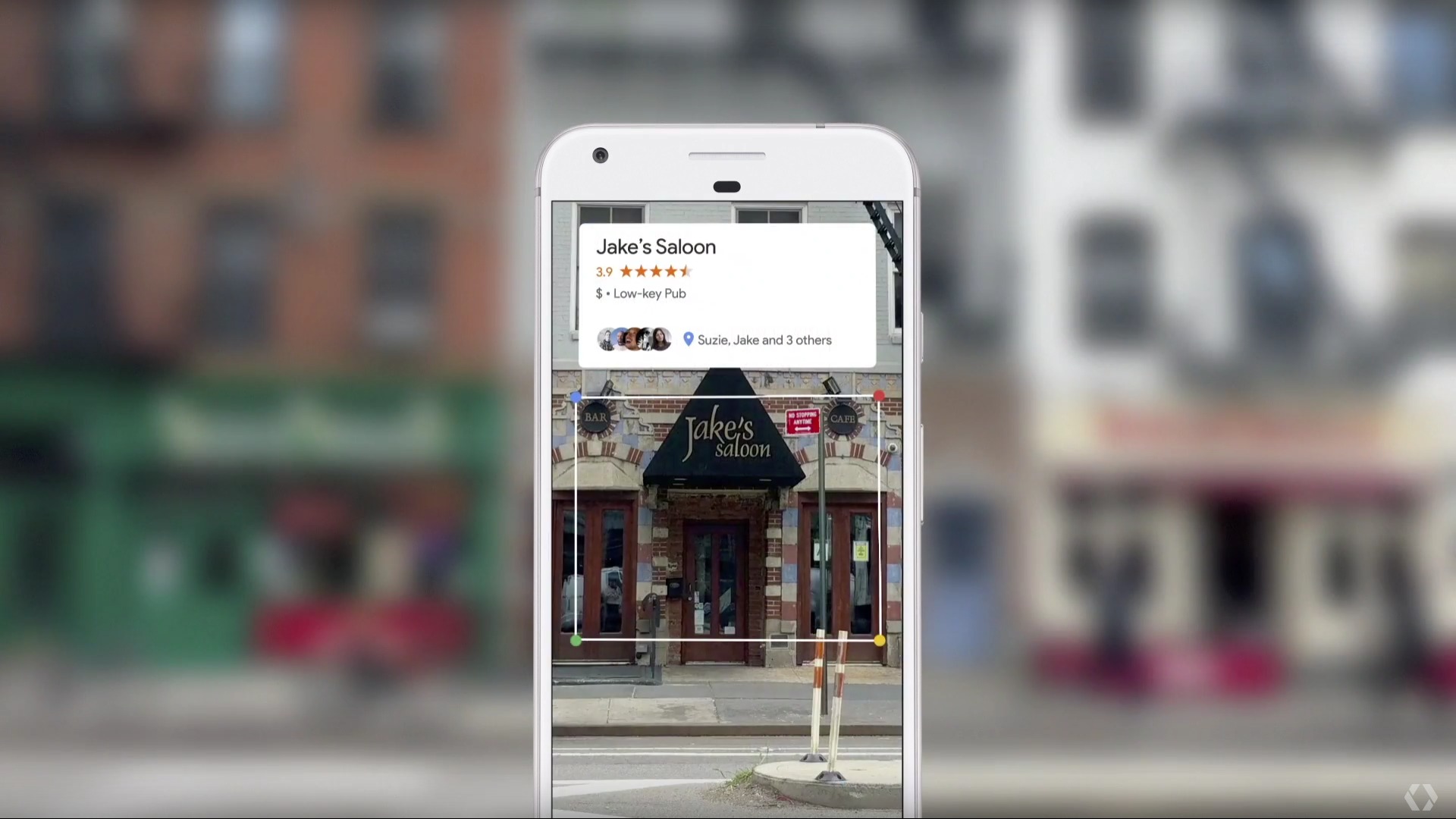

Lens is now rolling out to Google Assistant, just weeks after making its debut inside the Google Photos app. The feature, which lets you identify objects in the real world using your camera, is exclusive to Pixel and Pixel 2 smartphones for now.

Lens was unveiled at Google I/O back in May. Like its predecessor, Google Goggles, it uses visual analysis and machine learning to identify things like products and landmarks. It can also pick out information, such as phone numbers and addresses, when pointed at signs and flyers.

Lens was initially available inside Google Photos, and now, just as Google promised, it is being integrated into the Assistant on Pixel devices. To use it, you simply tap the Lens button, which opens the camera, then tap the object you wish to identify in the viewfinder.

This is almost exactly how Lens works inside the Photos app, except that you don't need to snap a photo first; it works in real time.

Not every Pixel user can see Lens inside Assistant yet, but it’s on its way. It will likely be a few weeks before Google makes it available to all.
