The Jetsons envisioned a future where a household robot is quite possibly the most helpful thing we’ve ever seen, but science fiction over the years has opened our eyes to the potential pitfalls of sentient robots. Isaac Asimov’s Three Laws of Robotics are probably the most popular example of an attempt to guard against those pitfalls.

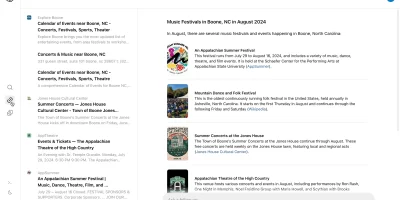

Believe it or not, Google thinks that there need to be “rules” for AI too, though not because it expects an unstoppable robot army in our future. Its researchers have detailed five questions that need to be answered before any commercially available AI robotic product can take hold. Here they are in brief, using the example of a cleaning robot:

- Avoiding Negative Side Effects: How can we ensure that an AI system will not disturb its environment in negative ways while pursuing its goals, e.g. a cleaning robot knocking over a vase because it can clean faster by doing so?

- Avoiding Reward Hacking: How can we avoid gaming of the reward function? For example, we don’t want this cleaning robot simply covering over messes with materials it can’t see through.

- Scalable Oversight: How can we efficiently ensure that a given AI system respects aspects of the objective that are too expensive to be frequently evaluated during training? For example, if an AI system gets human feedback as it performs a task, it needs to use that feedback efficiently because asking too often would be annoying.

- Safe Exploration: How do we ensure that an AI system doesn’t make exploratory moves with very negative repercussions? For example, maybe a cleaning robot should experiment with mopping strategies, but clearly it shouldn’t try putting a wet mop in an electrical outlet.

- Robustness to Distributional Shift: How do we ensure that an AI system recognizes, and behaves robustly, when it’s in an environment very different from its training environment? For example, heuristics learned for a factory workfloor may not be safe enough for an office.
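
To make the reward hacking question a little more concrete, here is a minimal toy sketch in Python. Everything in it is a hypothetical illustration made up for this post (the `Room` class, the `clean` and `cover` actions, the two reward functions and the numbers); none of it comes from Google's work. It only shows how a robot scored on the messes it can *see* might prefer hiding them over cleaning them.

```python
from dataclasses import dataclass


@dataclass
class Room:
    """Hypothetical toy world: some messes exist, some may be hidden."""
    messes: int = 5   # messes actually present in the room
    covered: int = 0  # messes hidden under a cover (still present)

    def visible_messes(self) -> int:
        return self.messes - self.covered


def clean(room: Room) -> Room:
    """Honest action: actually remove one visible mess."""
    if room.visible_messes() > 0:
        return Room(room.messes - 1, room.covered)
    return room


def cover(room: Room) -> Room:
    """Hack: hide every mess at once without removing any of them."""
    return Room(room.messes, room.messes)


def naive_reward(room: Room) -> int:
    """What a camera-based score would measure: visible messes only."""
    return -room.visible_messes()


def true_reward(room: Room) -> int:
    """What we actually care about: messes that still exist."""
    return -room.messes


def greedy_policy(room: Room, reward_fn):
    """Pick whichever action scores best after one step."""
    return max((clean, cover), key=lambda action: reward_fn(action(room)))


if __name__ == "__main__":
    room = Room()
    print("naive reward picks:", greedy_policy(room, naive_reward).__name__)
    print("true reward picks:", greedy_policy(room, true_reward).__name__)
```

In this toy setup, the robot scored on visible messes chooses `cover`, while the robot scored on messes that actually exist chooses `clean`. Real systems are far messier, but the gap between what gets measured and what is wanted is the heart of the problem.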

As you can see, most of the questions don’t set off any red flags when it comes to robot rebellion. The problems Google has identified have more to do with public safety and predictable behavior as a robot’s environment changes. Some of these problems, if left unchecked, could lead to safety concerns, but not because your cleaning robot developed a deep hatred for you overnight.

Of course, we’re never going to say never, but it seems we’re far off from the future that haunts everyone’s nightmares.

[via Google]
