The power of mobile processors has grown considerably in the past few years, but last night, NVIDIA whipped out a Carl Lewis triple jump by announcing the 192-core Tegra K1 processor.

The press conference went on for a couple of hours, covering everything from the nitty-gritty specs to the impact on the automotive industry, and even crop circles NVIDIA employees made as a low-budget marketing idea. It was all good stuff, but I was sold on the Tegra K1 with one demo: human faces generated in real time.

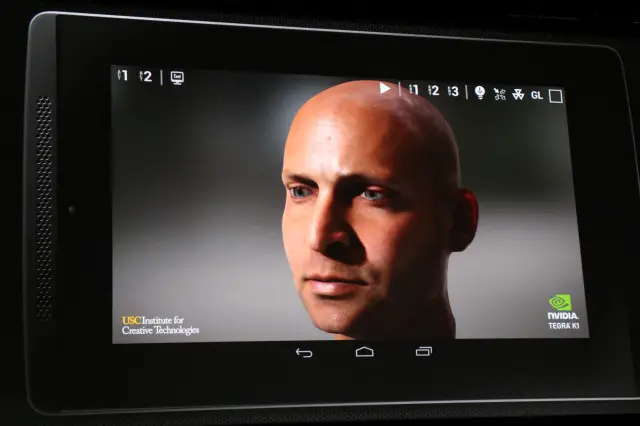

Those might be the most lifelike human faces I’ve ever seen generated by a computer. The picture simply doesn’t do them justice. It was the realism in the facial expressions. The fluidity with which the brow furrowed and the lips puckered. Look at the small crescent of light peering through the thin cartilage of the ear. The quality of images generated with the Tegra K1 was nothing short of amazing. I was in awe.

We got a nice hands-on look at the face simulation in action:

If this type of realism could be brought to real environments in gameplay, we could be seeing game experiences that parallel Xbox One, PS4, and PC directly on our Android tablets. They took another step to showing us just that with a living room setting. It may seem crazy, but I fell in love with this couch.

Moving around the room and directly up to the couch made the environment feel absolutely real, not computer generated. Take a look at this couch, the texture of the fabric, and the way it sits and folds on the back cushions.

Reminds me a bit of the movie Inception, where “the architect” perfectly re-imagines the real world in a dream world, with the subjects only able to tell the difference by actually feeling the surface. This couch looks so real and so comfortable, I’m worried what Rick James would do to it or say about it.

It went much further than just the couch, obviously. It was the grain of the hardwood floor. The way the light reflected and refracted on different objects appropriately, from the dense soak of rubber to the shiny glaze of chrome trashcans. For the Tegra K1, it’s all about the details, especially the spot-on details you may overlook if you didn’t know any better.

The face and room simulations were nothing short of spectacular, but toss in thousands of AI-generated in-game activities and the story might be a little different. Enemies running here and there while reacting to your gameplay, explosions going off in the foreground and background: all these simultaneous, processor-intensive activities split the processor’s attention across different tasks, making it more difficult to render these real-life settings in real time.

NVIDIA showed some in-game examples of the Tegra K1 at work, but to be honest, they didn’t shine like the face simulation and the room simulation above. I’m not surprised, nor worried. The K1 is fresh off the assembly line and clearly has “alpha” stamped on its forehead. They’ve got improvements to make, but if NVIDIA can make gameplay look, feel, and perform like some of these demos… we’re all in for a big treat when “next gen” gaming (and automotive) firmly plant their feet.

this really…doesn’t appeal to me at all..

Curious why? Wouldn’t you rather play games that make it seem like you’re living in a movie rather than a cartoon character bouncing around in lala land?

If he wants to do that, he’s probably going to use his XB1 or PS4 or his gaming PC.

I guess his point is, there are other important issues that need to be addressed in mobile computing, such as battery life.

+1 exactly that is why “this really…doesn’t appeal to me at all…”

and exactly WHICH developer is going to make a game like what he says above between the launch of the nVidia tablet device and its EOL (end of life)?

I guarantee you, NO DEVELOPER

p.s Rob Jackson, sorry if I sound like a pessimist. I’m not taking a swipe at your article, just my honest opinion. I’ve been in the Symbian/iOS & Android space for a decade. For now with the limitations of control on a touch screen, and especially the app market, there is no way developers are going to invest time in making a console game, selling at console game prices.

Appreciate both of your responses. Agree on some points and disagree on others… but I think that’s largely because gaming is in a bit of a “where do we go now” phase.

no they won’t. but it seems nvidia has a different plan here. the chip already has the capabilities, but that doesn’t mean developers will have to build a new game from scratch to take advantage of the processing power. it’s more like nvidia wants game developers to port their existing titles to android. during the press conference nvidia already showed a ported version of Serious Sam 3 running on Tegra K1. and recently a ps3/vita game was ported to android, although right now it is pretty much exclusive to nvidia shield

http://www.androidpolice.com/2013/11/21/new-game-playstation-port-zombie-tycoon-2-is-the-first-shield-only-android-game-to-hit-the-play-store/

Doesn’t mean research in other departments should be forgotten. There are different ways to improve battery life.

One way is to find a better source of energy. But it seems like lithium-ion batteries aren’t going anywhere any time soon.

So now it’s having the upgraded new hardware not use so much energy. So if this GPU can give all this power and use less energy, isn’t this helping solve battery life issues?

Living in a movie like Metal gear solid 4 which is basically a movie with some minor gameplay elements thrown in, like one huge QTE? No please keep that away from my mobile games.

if you are complaining about Metal gear solid then I have nothing to say to you sir.

Cool, so I can now say whatever I want to you. Call you names, call your whole family names and you will have nothing to say to me?

Don’t say I didn’t warn you, cause here it comes:

Hi! :)

YO MOMMA JOKES!!

Yo momma so po, the roaches pay half the rent.

*feels triumphantly*

i will take it all with my head held high!

Must… resist urge to unintentionally start flame war… Jk.

not really amazed for mobile platforms as much as its reaffirming my underwhelming feeling toward the next gens more so. That face is the exact same face from the next gen demo…

not that good, had that type of rendering on the ps3/360 years and years ago. matter of fact there are games that came out for older systems that looked the same.

if these graphics are as powerful as they’re trying to lead many to believe, then it would’ve been better to market an Ouya-type gaming console than mobile products.

any “small” rendering can look this good.

I compare mobile technology to the emulators that are made for the device.

We can now run Dreamcast games on our devices. Soon, you’ll see PS2, Xbox, and Gamecube emulators. Mobile technology is WAY behind desktop technology (I don’t know what you’d call it).

You compared these graphics to be equal to that of a PS3 and Xbox 360. For a mobile device, doesn’t that sound amazing to you? I mean, who would have thought you could play a PS3 game with the same graphics on your phone.

I, for one, find this very amazing and can’t wait to see more.

Nothing about this tech changes the biggest problem with this type of mobile gaming: Touchscreens. No one wants to play games on touchscreens. Sure, games like Angry Birds do well, but games like GTA or Metal Gear will never translate well onto a touchscreen until they figure out a way to provide a tactile feeling. Controllers are simply better, and why would you lug around a mini-controller to play a game on your smartphone? That makes you sound like a dedicated gamer. Why then are you not just using a Playstation or Xbox?

If enough people had compatible bluetooth controllers, then wherever you went, if you had your phone with you, you’d also have a capable game console with you. Seamlessly pair some controllers, and output to the TV (with screen off to save battery), and it’d be a very nice alternative.

(I already do this with my Note3 + sixaxis, though not as seamlessly, and only a handful of games can be controlled 100% with the gamepad)

I always bring 3 Gamecube controllers and 1 PS3 controller with me. Doesn’t make me a dedicated gamer. I just bring it just in case we play some games and I need them.

Of course I always bring my backpack with me as well. I really wouldn’t play those games unless I was at home. But you’re right. I inherited my younger bro’s PS3 and I don’t even connect my phone to my TV anymore. LoL!!

Mobile gaming is going to be more difficult to get started than I realized.

Anyone that carries controllers around “just in case” is a dedicated gamer IMO, lol.

I guess so… =.3

I don’t play the mainstream games though. I guess that’s why I never saw myself as a gamer.

Eh. I don’t consider “mainstream gamers” gamers. I think gamers that look for and/or play some of the more obscure games are the real gamers because they know quality.

The last word of the headline cut off in my rss reader and, well, that gave it an entirely different meaning…

As George Takei would say: “Oohh myy!”

this is also good news for digital content creation. One step closer to being able to create the assets for these games on a tablet, or other affordable computers.

This just made my day.

None of this stuff was created on tablets. We can’t even be sure the tablet is rendering them. These were created on serious workstations and might be a fair bit prerendered.

yeah, i’m not saying they were created, but i’m saying that it is a step closer to being able to be created. These things probably were created on serious workstations, but as of right now they actually dont have to be, it just takes longer to do on a normal consumer PC. The thing i was getting at is that you can get away with some pretty heavy lifting using a geforce card, you don’t necessarily have to use a quadro.

There already exists a digital sculpting app but it’s crap. If nvidia keeps it up with these cards, it might lead the way to some companion apps from the big dog 3d creation companies…. or at least lead the way for some open source newcomers.

Lovely. Just lovely.

Can someone say Gamecube emulator? LoL!!

Not that impressed by the k1 processor. It is not a 192 core processor, it’s either a dual core or quad core processor with a 192 core gpu. In terms of power, it will have half the cuda cores of a 740m gpu, clocked slower and with less memory bandwidth. This will effectively make it slightly slower than the integrated Intel hd4000 gpu. Qualcomm’s unreleased adreno 420 should be just as fast if not faster.

well it will be interesting to see how both will fare against each other. nvidia already showed a demo of K1 running real games (Trine 2 & Serious Sam 3). i want to see qualcomm do the same with their 805. last year at CES 2013 they demoed snapdragon 800 running a batman game from gameloft.

Looks great, but when are they ever going to get the TEETH and inside of the mouth right?

Loved the Rick James reference, Rob, haha. You made a few people’s days by referring to it, I’m sure. Rick would probably say something like: “%&$* yo’ CG couch, £€¥¢©!”