Google Lens is about to get a lot smarter with 3D modelling

Google is live on stage at Google I/O 2019 and has just shown off a demo of how machine learning and AR technology are expanding what we can use Google Lens for.

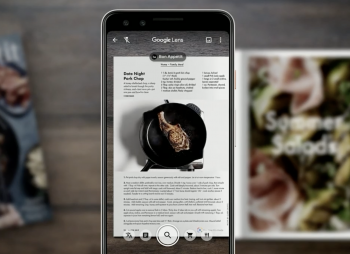

Google Lens has been able to identify text on a page for a while, but this technology is being expanded to link the detected content to related search results. For example, pointing Lens at a recipe book will surface a wealth of information relevant to that real-world item.

In addition, Lens is being built natively into Google Go to bring augmented text recognition to budget devices. This means you can use Google Lens to translate a sign, for example, into your native language and hear it read aloud to you, and this is now available on more devices than ever.

Google Lens is also being expanded to bring 3D models into the real world, using AR technology to place your search results in the environment in front of you. The demo showcased a search for a human muscle whose model was then overlaid onto the desk right in front of the user. Because the model can be zoomed and rotated, the search result becomes much easier to visualize and far more useful.

Google has taken Lens to the next level, not only by bringing it to more people through Google Go support but also by using AR to take in real-world information and overlay results from Google's vast information database onto the object in real time.