Project Soli uses radar to bring hand gestures to devices

The folks at Google ATAP are at it again. ATAP is responsible for some of the crazier things you’ve seen from Google in the past, like Project Ara and Project Jacquard. ATAP shoots for the moon, and its latest shot involves interacting with devices through radar.

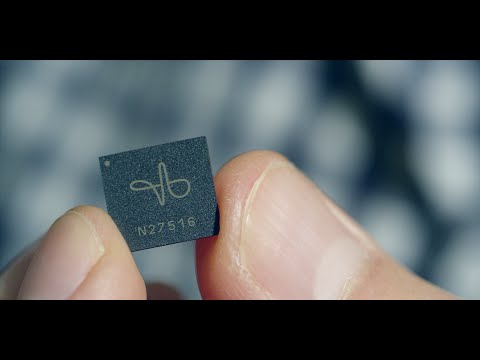

Project Soli uses radar to recognize gestures and fine hand movements. Radar is the technology you’ve probably seen on TV tracking ships, aircraft, and weather patterns. ATAP built this tech into a chip small enough to fit in a smartwatch. In the video above, you can see the watch detecting hand movements from a few inches away. Gestures control the UI without you ever touching it.

Project Soli isn’t just for smartwatches. ATAP also partnered with JBL to make a speaker. You can snap your fingers to turn music on or off, and more. It’s easy to see the potential for something like Project Soli. We’re surrounded by devices that use touchscreens and buttons, and both require physical touch to operate. Extending a device’s input into 3D space opens it up to do a lot of cool things.

ATAP will release a DevKit sometime next year for Project Soli. Is this something you would use in your everyday life?

[via Google]