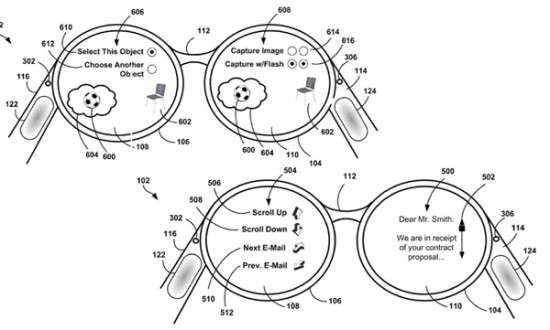

Google’s Project Glass has been one of the most interesting products we have seen in a long time. It is a unique new technology that aims to change the way we interact with our gadgets and the world. But said wearable device has also brought some confusion – Will we control the device by moving our heads? Maybe by talking to it? In both cases, we would look like we are crazy. That is why Google has also integrated a trackpad.

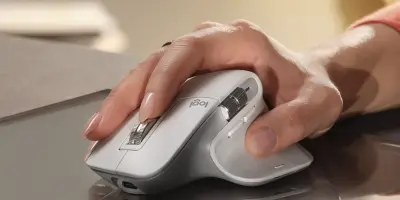

Because we do not know when Apple, Microsoft and Oracle will throw the next punch, Google has just acquired a patent for this trackpad technology from the USPTO. The patent describes the touch sensors as located on the sides of the device, with support for scrolling, tapping, and even configuring settings for left-handed users. These are also said to be transparent for improved peripheral vision.

It is a relief to learn that these will have a direct, manual interaction method. Though the dream of controlling our gadgets via voice and gestures seems fun, some can consider it a gimmick. Hell, many of you consider the whole product a gimmick already. Regardless, you don’t always want to be talking to your glasses.

The question one needs to ask is: what would be convenient for me? Being hands-free, or using hand gestures, head movement, or eye movement (and you can go on), is part of what the future demands. Think of the day when it's totally virtual. The options are limitless; use will dictate function.

I think eye movement would be a bit complicated. Our eyes are constantly moving, we are winking and blinking all the time, and there are other actions we really have little control over.

This stuff will be really good only when it interacts directly with the brain via brain waves. Then we can THINK what we want it to do and it’s done… until then, it’s going to be awkward to control.

Good point!

I haven’t read much into this unfortunately, so I’m left wondering if these also recognize gesture controls (such as waving your hands in front of you a la Minority Report) while Augmented Reality is displayed on the glasses?

I don’t know, but now that I read your post, I SOOOO want that!

I really hope these glasses allow us developers to create apps that constantly use the camera. For example, using hand gestures in front of your eyes to do certain actions.

I believe eye movement and head movement control are already in use in some military applications. Of course, you might look like you have a nervous tic every time you text, but I'm pretty sure it can be done.