As fully abled people, we can easily take for granted the things we do with our bodies. The story is very different for people with disabilities who can’t interact with everyday gadgets like smartphones the way the rest of us can, which is why accessibility features are not just important but, we would say, critical.

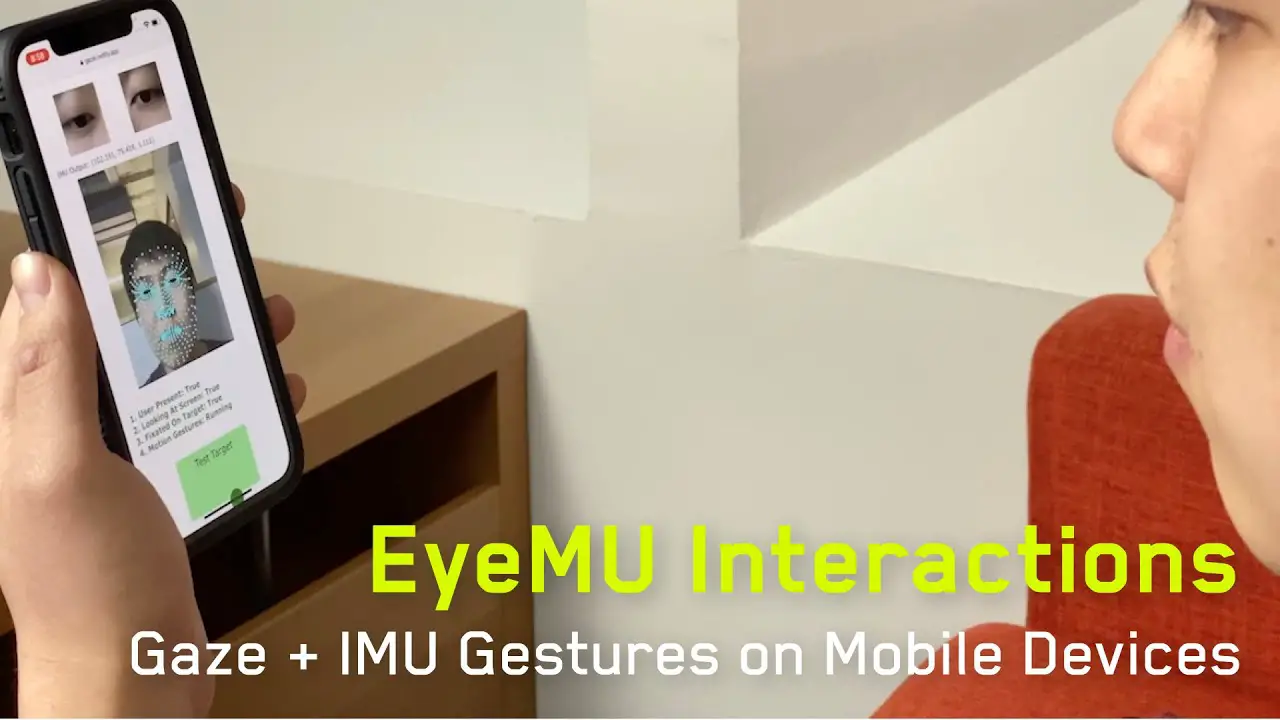

With that in mind, researchers at Carnegie Mellon University are developing a system called EyeMU that aims to take accessibility to the next level. As the name implies, it involves our eyes: the system detects eye movement and uses it as a way of interacting with elements on the smartphone screen.

Using eyes to interact with devices isn’t new. A couple of years ago, Google launched a similar initiative where users could use their eyes to “select” words and phrases to be spoken. The main difference is that EyeMU will allow users to interact with the entire device.

This includes selecting and opening notifications, returning to apps, selecting photos, and more. To prevent users from accidentally performing actions they didn’t mean to, the researchers have paired eye movement with hand gestures, so that different gestures act as confirmations or dismissals.

EyeMU hasn’t been released to the public yet, and we imagine there’s still quite a bit of work to be done, but it does show some of the accessibility features we might be able to expect in the future.

Source: Android Police
