Lens blur effects seem to be the latest trend in smartphone cameras. In an attempt to simulate larger DSLR cameras with their big lenses and apertures, smartphones like the HTC One M8 and Samsung Galaxy S5 are now using a mixture of software and hardware to create a shallow depth of field and the soft background blur known as “bokeh.”

For the One M8, HTC achieves a shallow depth of field by using their “Duo Camera” system: two cameras that work together like a pair of human eyes to help the phone distinguish what’s near and far, allowing users to go back into a photo and refocus as necessary. As we mentioned in our review, the feature seems to be hit or miss, rarely working out the way it would on a larger SLR.

Samsung’s implementation on the Galaxy S5 is a bit different, in a feature they call “selective focus.” The GS5 camera quickly focuses close, then far, allowing the user to go back and refocus where they’d like, creating extra bokeh for a professional look. Again, just as we saw with HTC, the results are a mixed bag.

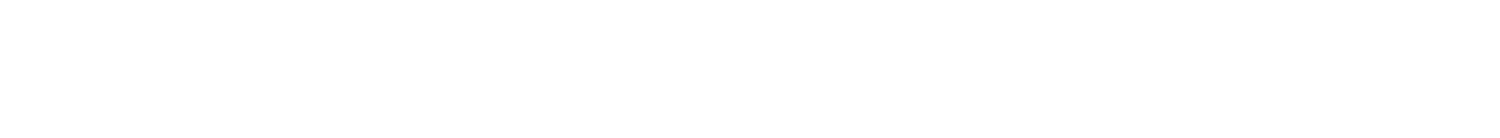

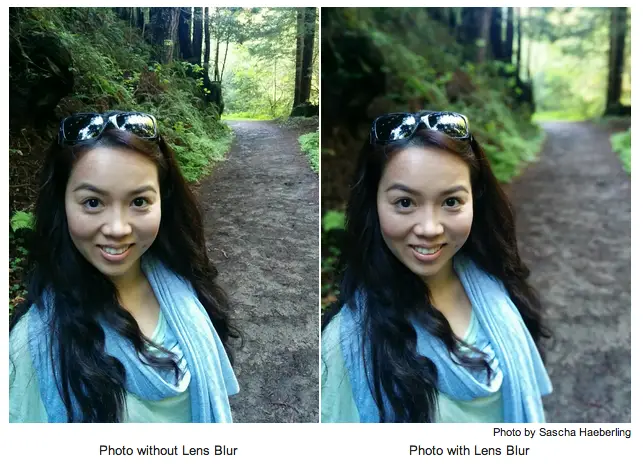

In the newly released Google Camera app (now available on Google Play), we now see Google taking a stab at the DoF issue, albeit approaching it a bit differently from HTC or Samsung. Once again, it’s a combination of hardware and software working together for a feature they call Lens Blur. When taking a picture using Lens Blur, the user simply sweeps their camera up from the subject, which somehow communicates back to the phone what’s near and far. To explain exactly what the heck is going on behind the scenes, Google detailed everything in a blog post.

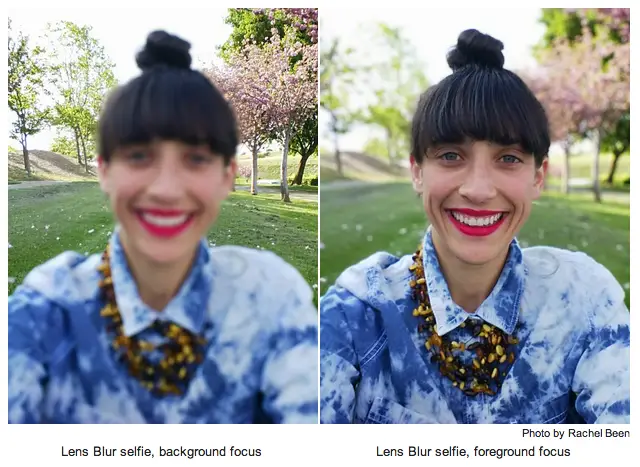

Apparently, Lens Blur uses much the same 3D mapping technology Google already uses for Google Maps Photo Tour and Google Earth, only the algorithm has been shrunk down for your smartphone. As you sweep the camera up, your smartphone takes a whole series of pictures, and Lens Blur uses an algorithm to convert them into a 3D model. Lens Blur then estimates depth, as shown in the black and white image (above), by triangulating the position of the subject in relation to the background. Pretty neat.
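For the curious, the triangulation idea can be sketched in a few lines of Python. This is only a rough illustration and not Google's actual pipeline: it assumes a simple pinhole camera seen from just two positions, and the function names, the `strength` parameter, and the example numbers are all made up for this sketch. The closer an object is, the more it appears to shift between the two shots, and that shift (the disparity) gives you the depth. The blur amount is then assumed to grow with the difference in inverse depth from the focus plane, which is roughly how a real lens behaves.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Estimate depth in meters from the shift of a point between two views.

    Assumes a pinhole camera moved sideways by baseline_m between shots.
    focal_px is the focal length expressed in pixels.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def blur_radius_px(depth_m, focus_m, strength=20.0):
    """Hypothetical blur radius for a pixel at depth_m when focused at focus_m.

    Assumption: blur scales with the difference in inverse depth,
    so anything on the focus plane gets zero blur.
    """
    return strength * abs(1.0 / focus_m - 1.0 / depth_m)


# Made-up numbers: the subject shifts 25 px and the background 5 px
# after the camera moves 5 cm, with a 1000 px focal length.
subject_depth = depth_from_disparity(1000, 0.05, 25)
background_depth = depth_from_disparity(1000, 0.05, 5)

print("subject depth (m):", subject_depth)
print("background depth (m):", background_depth)
print("background blur (px):", blur_radius_px(background_depth, subject_depth))
```

The subject, which shifts more between the two views, comes out closer than the background, so focusing on the subject leaves it sharp while the background gets blurred.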

It’s all pretty damn technical, and it’s mind blowing the amount of work that goes into making something as seemingly “simple” as Lens Blur work on your mobile device (even if it’s essentially just the by-product of a much bigger technology). You can read up more on the technicals behind Lens Blur by visiting the link below.

What Google does with one camera, HTC has to do with two.

I don’t know if you have read any of the reviews from other sites on the GS5… but in the ones I have read, although the GS5 camera can do background blurring with one camera, it’s significantly more accurate on HTC’s. Also, with HTC’s you can do it with the tap of a button, and according to the guy who posted at the top of the page, the process is much more tedious with purely software algorithms.

HTC’s duo camera going down in flames

Dear Google,

Give me back all of the features that you removed. I’ll give you back Lens Blur in exchange, even though I like it a lot.

Sincerely,

Anybody who used Timelapse, snapped photos while recording a video, or used any of the previous Scenes.

If I’m not mistaken, you can uninstall the update, since the camera app is a core application like, for example, Google+.

That’s exactly what I did. I’m hopeful that everything found in the /strings is correct and that we’ll all be seeing an update soon with some of these features back!

I have a Nexus 5 and the M8, and it’s definitely a much faster and easier process on the M8. On the M8 all you do is snap a pic, which takes a fraction of a second, and that’s it. With the Nexus 5 (or using this app on any KitKat device) you first have to select that option, then take the pic, but once you initially take the pic you then have to slowly move the camera until it completes the process. If you move too fast or shake too much you will have to start all over again. In a controlled environment the N5 may be able to pull off slightly better blur effects, but in the real world the M8 has it beat for sure when it comes to this feature.

Yeah, but having it available on any KitKat device, rather than exclusive to a single device, makes that extra 3 seconds worth it.

Let’s see how long it takes Apple to copy this one and claim it’s theirs.

Just tried this twice. First time was successful enough that I went looking for something to shoot worth posting… and the software messed up. So, it seems hit or miss also.

Chris…there you go with the “bait and switch!” Put a cute girl on the cover and as soon as peeps click through…BAM, the disappointment lies in waiting.

Okay, so it wasn’t just me. Chrome was running slow, so I just restarted the browser. I’m over here looking for the article. LoL!!

I’m like, “Oh, they changed that homely lady”.

Nope. =.=

My eyes are slightly irritated from having to gaze upon the less than attractive female pictured in this article. Yes, I am somewhat shallow. Come at me.

I just got the new HTC One on 4/11 and have been testing the defocus feature a ton. I actually think it’s much better than “hit or miss.” I actually think it works pretty amazingly, and based on my Instagram responses, so does everyone else. I did a side-by-side comparison with the GS5 and the Sony XperiaS1, and everyone involved said the results were much better on the One. There are specific instances where it’s not perfect, like if there is a gap between things in the foreground, e.g. my bent arm attached to my hip. The software generally doesn’t understand that the gap is in the background. But by comparative analysis, the results from the HTC One are superior.

I’ve created a parallax viewer for Lens Blur photos. It’s an open source web app available at http://depthy.stamina.pl/ . It lets you extract the depth map, works on Chrome with WebGL and looks pretty awesome on some photos.
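For anyone wondering how a parallax effect can come out of a depth map at all, here is a tiny Python sketch of the general idea. It is not the code behind the viewer above, just an illustration under some assumptions: the image and depth map are plain nested lists, depth runs from 0.0 (far) to 1.0 (near), and the `parallax_shift` name and `offset_px` parameter are made up. Near pixels get slid sideways more than far ones, which fakes a small change of viewpoint.

```python
def parallax_shift(image, depth, offset_px):
    """Shift each pixel horizontally in proportion to its depth value.

    image: 2D list of pixel values (one list per row).
    depth: 2D list of the same shape, 0.0 = far, 1.0 = near (assumed convention).
    offset_px: how far the nearest pixels move, in pixels.
    """
    width = len(image[0])
    # Start from a copy so gaps left behind by moved pixels keep the old value.
    out = [row[:] for row in image]
    for y, row in enumerate(image):
        # Draw far pixels first so nearer ones end up on top of them.
        order = sorted(range(width), key=lambda x: depth[y][x])
        for x in order:
            new_x = x + round(offset_px * depth[y][x])
            if 0 <= new_x < width:
                out[y][new_x] = row[x]
    return out


# A one-row "photo" where only the third pixel is close to the camera.
photo = [[1, 2, 3, 4]]
depth_map = [[0, 0, 1, 0]]
print(parallax_shift(photo, depth_map, 1))
```

Redrawing the image with a slightly different `offset_px` as the mouse or phone moves is what gives the wobbling 3D look. A real viewer would do this on the GPU and fill the gaps more cleverly.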